Hyperparameter Optimization

Image Credit: Franceschi, L., et al. “Bilevel programming for hyperparameter optimization and meta-learning.” (2018).

With recent technological advances, high-dimensional datasets have become widespread in applications ranging from the social sciences to computational biology. In many statistical problems, the number of unknown parameters can also be significantly larger than the number of data samples, which leads to underdetermined and computationally intractable problems. To select the relevant parameters, much work has been devoted to sparsity-inducing norms, which result in non-smooth optimization problems. Because these problems are non-smooth, the corresponding solvers are usually non-smooth as well.
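As a concrete instance of a sparsity-inducing norm and a non-smooth solver, the sketch below runs proximal gradient descent (ISTA) on the Lasso, an ℓ1-penalized least-squares problem. It is a minimal illustration on synthetic data, not the method used in this project; the function names, problem sizes, and the value of `lam` are assumptions chosen for the example. The soft-thresholding step is the non-smooth part: it has a kink wherever an entry crosses the threshold.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (has a kink at |v_i| = tau)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def lasso_objective(X, y, w, lam):
    """0.5 * ||X w - y||^2 + lam * ||w||_1."""
    return 0.5 * np.sum((X @ w - y) ** 2) + lam * np.sum(np.abs(w))


def ista(X, y, lam, n_iter=500):
    """Proximal gradient descent (ISTA) on the Lasso objective above."""
    # Step size 1/L, where L = ||X||_2^2 is the Lipschitz constant
    # of the gradient of the least-squares term.
    step = 1.0 / np.linalg.norm(X, 2) ** 2
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y)  # gradient of the smooth term only
        w = soft_threshold(w - step * grad, step * lam)
    return w


# Synthetic underdetermined problem (assumed sizes): 20 samples, 100 unknowns,
# of which only 3 are active.
rng = np.random.default_rng(0)
X = rng.standard_normal((20, 100))
w_true = np.zeros(100)
w_true[:3] = [2.0, -3.0, 1.5]
y = X @ w_true

w_hat = ista(X, y, lam=1.0)
print("nonzero coefficients:", np.count_nonzero(w_hat))
print("objective:", lasso_objective(X, y, w_hat, 1.0))
```

Here `lam` is exactly the kind of hyperparameter whose value controls how many coefficients survive the thresholding.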

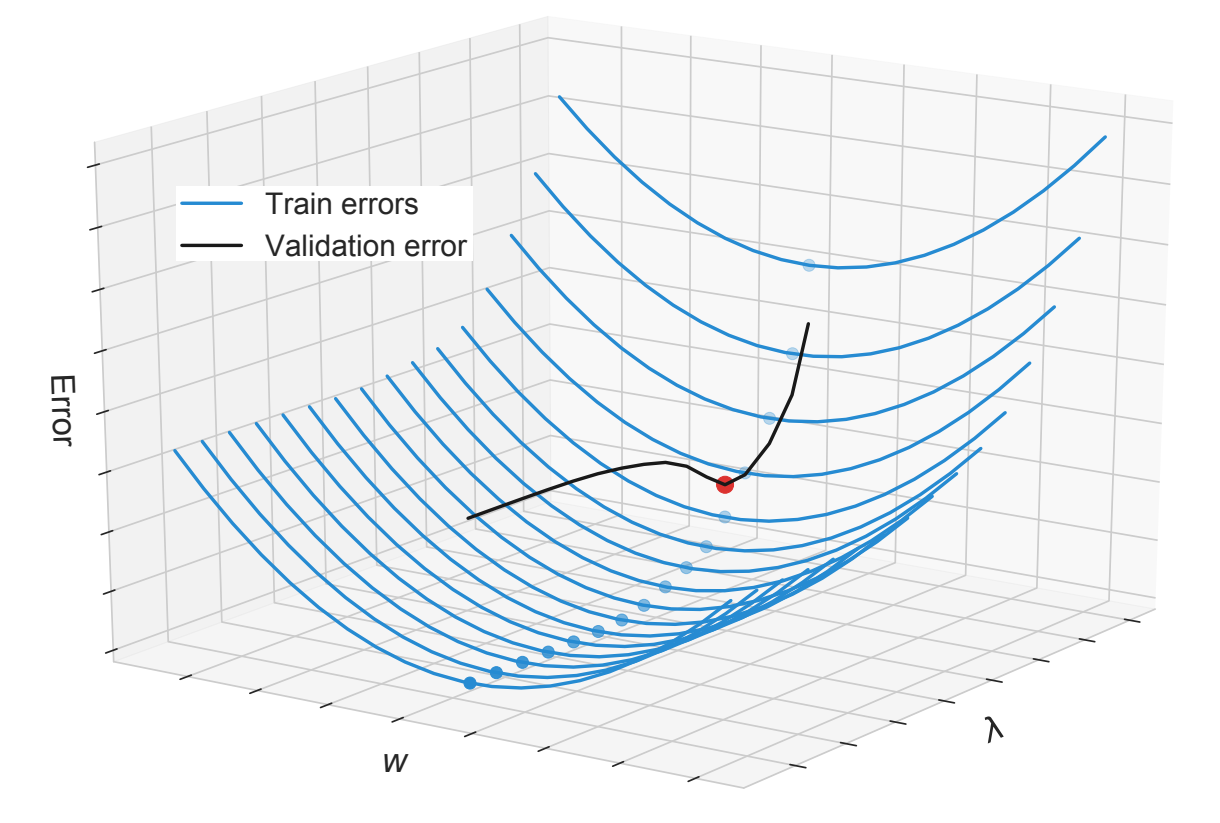

Besides the design of efficient solvers, these models also require tuning many (sometimes thousands of) hyperparameters whose values strongly affect the quality of the solution. As such, a simple grid search quickly becomes impractical.
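To make the scaling concrete: a grid with k candidate values for each of d hyperparameters contains k^d configurations, each of which requires a full model fit. The short sketch below counts them; the helper name `grid_size` and the candidate values are illustrative assumptions.

```python
import itertools


def grid_size(n_values, n_hyperparameters):
    """Number of configurations in a full grid: n_values ** n_hyperparameters."""
    return n_values ** n_hyperparameters


# A small grid can still be enumerated explicitly.
candidates = [0.01, 0.1, 1.0]
small_grid = list(itertools.product(candidates, repeat=2))
print(len(small_grid), "configurations for 2 hyperparameters")

# With thousands of hyperparameters, enumeration is hopeless:
# report the number of decimal digits in the grid size instead.
n_digits = len(str(grid_size(10, 1000)))
print("10 values x 1000 hyperparameters: a number with", n_digits, "digits")
```

This exponential growth is the reason gradient-based alternatives to exhaustive search become attractive as the number of hyperparameters increases.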

The principal contributions of this project are i) the design of smooth algorithms for non-smooth optimization problems and ii) the formulation of the hyperparameter learning problem as a continuous bilevel optimization problem where:
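In a generic bilevel formulation of this kind (a standard textbook form, assumed here rather than taken from the project's papers), an outer problem picks the hyperparameters that minimize a validation error, while an inner problem fits the model on training data for those hyperparameters. The sketch below illustrates the idea with ridge regression substituted for a sparsity-inducing penalty, so that the inner solution is smooth and available in closed form. The parametrization `lam = exp(theta)`, the function names, and the synthetic data are all assumptions made for the example.

```python
import numpy as np


def inner_solution(X_tr, y_tr, theta):
    """Inner problem: ridge regression with penalty exp(theta), in closed form."""
    A = X_tr.T @ X_tr + np.exp(theta) * np.eye(X_tr.shape[1])
    return np.linalg.solve(A, X_tr.T @ y_tr), A


def outer_loss_and_grad(theta, X_tr, y_tr, X_val, y_val):
    """Validation loss at the inner solution, and its derivative w.r.t. theta."""
    w, A = inner_solution(X_tr, y_tr, theta)
    residual = X_val @ w - y_val
    loss = 0.5 * np.sum(residual ** 2)
    # Differentiating A(theta) w = X_tr^T y_tr with respect to theta gives
    # dw/dtheta = -exp(theta) * A^{-1} w  (implicit differentiation).
    dw_dtheta = -np.exp(theta) * np.linalg.solve(A, w)
    grad = (X_val.T @ residual) @ dw_dtheta
    return loss, grad


def tune(X_tr, y_tr, X_val, y_val, theta=0.0, n_steps=50):
    """Gradient descent on theta with a backtracking (Armijo) line search."""
    loss, grad = outer_loss_and_grad(theta, X_tr, y_tr, X_val, y_val)
    for _ in range(n_steps):
        step = 1.0
        while step > 1e-10:
            cand = theta - step * grad
            cand_loss, cand_grad = outer_loss_and_grad(cand, X_tr, y_tr, X_val, y_val)
            if cand_loss <= loss - 1e-4 * step * grad ** 2:
                break  # sufficient decrease: accept this step
            step *= 0.5
        else:
            break  # no acceptable step found: stop at the current theta
        theta, loss, grad = cand, cand_loss, cand_grad
    return theta, loss


# Synthetic noisy regression data (assumed sizes), split into train and validation.
rng = np.random.default_rng(0)
w_true = rng.standard_normal(20)
X_tr, X_val = rng.standard_normal((30, 20)), rng.standard_normal((30, 20))
y_tr = X_tr @ w_true + rng.standard_normal(30)
y_val = X_val @ w_true + rng.standard_normal(30)

init_loss, _ = outer_loss_and_grad(0.0, X_tr, y_tr, X_val, y_val)
theta_hat, final_loss = tune(X_tr, y_tr, X_val, y_val)
print("initial validation loss:", init_loss)
print("tuned validation loss:", final_loss, "with lam =", np.exp(theta_hat))
```

The same outer loop applies to many hyperparameters at once, since one gradient evaluation replaces an entire sweep over candidate values. With a non-smooth inner problem such as the Lasso, the closed-form solve is no longer available, which is where smooth algorithms for the inner problem come in.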

Related Publications

- Relax and Penalize - a New Bilevel Approach to Mixed-Binary Hyperparameter Optimization, Transactions on Machine Learning Research (TMLR), 2025.
- Smooth optimization of orthogonal wavelet basis, Conférence sur l'Apprentissage Automatique (CAp), 2021.
- Unveiling Groups of Related Tasks in Multi-Tasks Learning, International Conference on Pattern Recognition (ICPR), 2020.
- Bilevel learning of the group Lasso structure, Conference on Neural Information Processing Systems (NeurIPS), 2018.
- Inferring the group Lasso structure via bilevel optimization, ICML Workshop - Modern Trends in Nonconvex Optimization for Machine Learning, 2018.